| Xw | Yw | Zw | Yi | Zi |
|---|---|---|---|---|
| 0 | 0 | 0 | 100 | 36 |
| 1 | 0 | 2 | 90 | 72 |
| 1 | 0 | 4 | 90 | 112 |
| 0 | 1 | 5 | 117 | 131 |
| 0 | 3 | 7 | 154 | 168 |
| 0 | 4 | 4 | 171 | 106 |
| 0 | 3 | 2 | 152 | 69 |
| 0 | 6 | 1 | 205 | 44 |
| 0 | 5 | 8 | 191 | 185 |
| 4 | 0 | 7 | 57 | 164 |
| 5 | 0 | 4 | 46 | 100 |
| 4 | 0 | 1 | 58 | 42 |
| 2 | 0 | 9 | 80 | 208 |
| 7 | 0 | 2 | 23 | 52 |
| 6 | 0 | 8 | 35 | 181 |
| 0 | 7 | 6 | 228 | 142 |
| 0 | 4 | 10 | 173 | 227 |
| 5 | 0 | 10 | 47 | 225 |
| 0 | 2 | 9 | 137 | 208 |
| 0 | 5 | 2 | 188 | 65 |
| 0 | 6 | 5 | 209 | 123 |
| 6 | 0 | 6 | 35 | 139 |
| 0 | 6 | 10 | 211 | 225 |
| 3 | 0 | 5 | 69 | 125 |
| 7 | 0 | 11 | 24 | 244 |

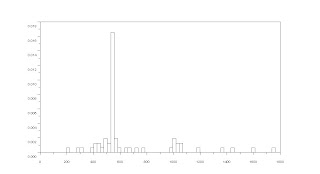

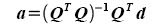

Using these data, we set up appended matrices containing the elements of the equation below.

Appending the leftmost matrix for every calibration point gives the matrix Q, and appending the rightmost matrix for every calibration point gives the matrix d. The unknown is the second matrix in the equation, which we denote as matrix a. We solve for it by applying the equation below.
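For reference, here is a sketch of that system, assuming the standard direct linear transformation (DLT) camera model with $a_{34} = 1$ (consistent with the final entry of matrix a). The model maps a world point to image coordinates through a common denominator; multiplying it out gives two linear rows per calibration point, and stacking those rows over all points gives $Q a = d$. The ordering $a_{11}, \dots, a_{14}, a_{21}, \dots, a_{24}, a_{31}, a_{32}, a_{33}$ is an assumption, although with this ordering the reported matrix a reproduces the calculated coordinates in the check table.

$$
Y_i = \frac{a_{11}X_w + a_{12}Y_w + a_{13}Z_w + a_{14}}{a_{31}X_w + a_{32}Y_w + a_{33}Z_w + 1},
\qquad
Z_i = \frac{a_{21}X_w + a_{22}Y_w + a_{23}Z_w + a_{24}}{a_{31}X_w + a_{32}Y_w + a_{33}Z_w + 1}
$$

$$
\left(\begin{array}{ccccccccccc}
X_w & Y_w & Z_w & 1 & 0 & 0 & 0 & 0 & -Y_i X_w & -Y_i Y_w & -Y_i Z_w \\
0 & 0 & 0 & 0 & X_w & Y_w & Z_w & 1 & -Z_i X_w & -Z_i Y_w & -Z_i Z_w
\end{array}\right)
\begin{pmatrix} a_{11} \\ a_{12} \\ \vdots \\ a_{33} \end{pmatrix}
=
\begin{pmatrix} Y_i \\ Z_i \end{pmatrix},
\qquad
a = \left(Q^{\mathsf T} Q\right)^{-1} Q^{\mathsf T} d
$$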

In my case, I used Excel to construct the matrices and perform the calculation, but other tools such as Scilab work as well. I will not show the appended matrices, only the calculated matrix a.
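As another alternative to Excel or Scilab, the construction of Q and d and the solution for a can be sketched in Python with NumPy. This is a minimal sketch, assuming the standard DLT formulation with a34 = 1, where each point contributes the rows [Xw, Yw, Zw, 1, 0, 0, 0, 0, -Yi·Xw, -Yi·Yw, -Yi·Zw] and [0, 0, 0, 0, Xw, Yw, Zw, 1, -Zi·Xw, -Zi·Yw, -Zi·Zw]. The helper names `build_q_d` and `solve_calibration` are hypothetical. It uses `np.linalg.lstsq`, which returns the same least-squares solution as (QᵀQ)⁻¹Qᵀd when Q has full column rank, without forming the inverse explicitly.

```python
import numpy as np

# Calibration data from the first table: columns are Xw, Yw, Zw, Yi, Zi
DATA = np.array([
    [0, 0, 0, 100, 36], [1, 0, 2, 90, 72], [1, 0, 4, 90, 112],
    [0, 1, 5, 117, 131], [0, 3, 7, 154, 168], [0, 4, 4, 171, 106],
    [0, 3, 2, 152, 69], [0, 6, 1, 205, 44], [0, 5, 8, 191, 185],
    [4, 0, 7, 57, 164], [5, 0, 4, 46, 100], [4, 0, 1, 58, 42],
    [2, 0, 9, 80, 208], [7, 0, 2, 23, 52], [6, 0, 8, 35, 181],
    [0, 7, 6, 228, 142], [0, 4, 10, 173, 227], [5, 0, 10, 47, 225],
    [0, 2, 9, 137, 208], [0, 5, 2, 188, 65], [0, 6, 5, 209, 123],
    [6, 0, 6, 35, 139], [0, 6, 10, 211, 225], [3, 0, 5, 69, 125],
    [7, 0, 11, 24, 244],
], dtype=float)


def build_q_d(world, image):
    """Append two rows of Q and two entries of d per calibration point (DLT, a34 = 1)."""
    rows, rhs = [], []
    for (x, y, z), (yi, zi) in zip(world, image):
        rows.append([x, y, z, 1, 0, 0, 0, 0, -yi * x, -yi * y, -yi * z])
        rows.append([0, 0, 0, 0, x, y, z, 1, -zi * x, -zi * y, -zi * z])
        rhs.extend([yi, zi])
    return np.array(rows), np.array(rhs)


def solve_calibration(world, image):
    """Return the 12 entries of matrix a, with a34 fixed to 1."""
    Q, d = build_q_d(world, image)
    # Least-squares solution of Q a = d (same result as (Q^T Q)^-1 Q^T d for full-rank Q)
    a, *_ = np.linalg.lstsq(Q, d, rcond=None)
    return np.append(a, 1.0)


a = solve_calibration(DATA[:, :3], DATA[:, 3:])
print(a)
```

Since the least-squares solution minimizes the residual of Q a = d, it should land close to the matrix a obtained in Excel.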

Matrix a


| a |
|---|
| -1.12E+01 |
| 16.47929 |
| -3.72E-02 |
| 9.93E+01 |
| -3.82E+00 |
| -2.28E+00 |
| 1.88E+01 |
| 3.67E+01 |
| -1.20E-02 |
| -6.07E-03 |
| -2.53E-03 |
| 1 |

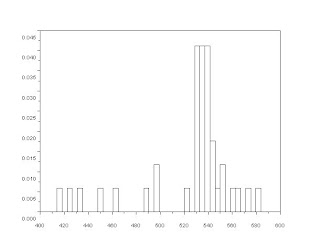

Now we check whether these values are correct, using the equation

We apply it to our original calibration data and check whether we recover the same [Yi, Zi] coordinates. The table below shows the results and their differences from the known values.

| Yr (calculated) | Zr (calculated) | Yi (actual) | Zi (actual) | delta Y | delta Z |
|---|---|---|---|---|---|
| 99.26059921 | 36.6903502 | 100 | 36 | 0.739401 | 0.69035 |
| 89.53561712 | 71.7207949 | 90 | 72 | 0.464383 | 0.279205 |
| 89.92225298 | 110.57204 | 90 | 112 | 0.077747 | 1.42796 |
| 117.7563384 | 130.931302 | 117 | 131 | 0.756338 | 0.068698 |
| 153.9647225 | 167.560105 | 154 | 168 | 0.035278 | 0.439895 |
| 170.9055698 | 106.477688 | 171 | 106 | 0.09443 | 0.477688 |
| 152.1637482 | 69.0766455 | 152 | 69 | 0.163748 | 0.076645 |
| 206.1266372 | 43.4976498 | 205 | 44 | 1.126637 | 0.50235 |
| 191.0174924 | 185.15496 | 191 | 185 | 0.017492 | 0.15496 |
| 58.10852607 | 163.864721 | 57 | 164 | 1.108526 | 0.135279 |
| 46.48536116 | 99.8337782 | 46 | 100 | 0.485361 | 0.166222 |
| 57.41563756 | 42.3547116 | 58 | 42 | 0.584362 | 0.354712 |
| 80.32558877 | 208.104854 | 80 | 208 | 0.325589 | 0.104854 |
| 22.99597131 | 52.210583 | 23 | 52 | 0.004029 | 0.210583 |
| 35.14187397 | 180.953798 | 35 | 181 | 0.141874 | 0.046202 |
| 227.5075306 | 141.768338 | 228 | 142 | 0.492469 | 0.231662 |
| 173.3966707 | 226.951393 | 173 | 227 | 0.396671 | 0.048607 |
| 47.01195681 | 224.895651 | 47 | 225 | 0.011957 | 0.104349 |
| 136.650702 | 208.739956 | 137 | 208 | 0.349298 | 0.739956 |
| 188.2468657 | 65.2110463 | 188 | 65 | 0.246866 | 0.211046 |
| 208.1607909 | 123.1026 | 209 | 123 | 0.839209 | 0.1026 |
| 35.02876316 | 138.731433 | 35 | 139 | 0.028763 | 0.268567 |
| 210.7650967 | 225.019992 | 211 | 225 | 0.234903 | 0.019992 |
| 68.89331288 | 125.394012 | 69 | 125 | 0.106687 | 0.394012 |
| 23.20818217 | 244.182491 | 24 | 244 | 0.791818 | 0.182491 |
| Average | | | | 0.384953 | 0.297556 |

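The calculated values agree closely with the actual ones: the average differences are about 0.38 in Y and 0.30 in Z, and the largest single difference is about 1.4 (in Z, for the point [1, 0, 4]). This means our calculated matrix a is approximately correct.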
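The check above can be sketched in Python as well: project each world point with the reported matrix a and average the absolute differences from the known image coordinates. This is a minimal sketch, assuming the DLT projection Yi = (a11·Xw + a12·Yw + a13·Zw + a14) / (a31·Xw + a32·Yw + a33·Zw + 1), and likewise for Zi with the a2* row. The helper name `project` is hypothetical. Because the entries of a are printed to only a few significant figures, the results will differ slightly from the Yr and Zr columns, which were computed at full precision.

```python
import numpy as np

# Matrix a as reported above (rounded), in the assumed order a11..a14, a21..a24, a31..a34
A = np.array([-1.12e1, 16.47929, -3.72e-2, 9.93e1,
              -3.82e0, -2.28e0, 1.88e1, 3.67e1,
              -1.20e-2, -6.07e-3, -2.53e-3, 1.0])

# Calibration data from the first table: columns are Xw, Yw, Zw, Yi, Zi
DATA = np.array([
    [0, 0, 0, 100, 36], [1, 0, 2, 90, 72], [1, 0, 4, 90, 112],
    [0, 1, 5, 117, 131], [0, 3, 7, 154, 168], [0, 4, 4, 171, 106],
    [0, 3, 2, 152, 69], [0, 6, 1, 205, 44], [0, 5, 8, 191, 185],
    [4, 0, 7, 57, 164], [5, 0, 4, 46, 100], [4, 0, 1, 58, 42],
    [2, 0, 9, 80, 208], [7, 0, 2, 23, 52], [6, 0, 8, 35, 181],
    [0, 7, 6, 228, 142], [0, 4, 10, 173, 227], [5, 0, 10, 47, 225],
    [0, 2, 9, 137, 208], [0, 5, 2, 188, 65], [0, 6, 5, 209, 123],
    [6, 0, 6, 35, 139], [0, 6, 10, 211, 225], [3, 0, 5, 69, 125],
    [7, 0, 11, 24, 244],
], dtype=float)


def project(a, world):
    """Map world points (N x 3) to image coordinates (N x 2) with the DLT model."""
    P = a.reshape(3, 4)  # rows: Y numerator, Z numerator, common denominator
    homog = np.hstack([world, np.ones((len(world), 1))])  # append 1 for the constant terms
    proj = homog @ P.T  # shape (N, 3)
    return proj[:, :2] / proj[:, 2:3]  # divide both numerators by the denominator


predicted = project(A, DATA[:, :3])
delta = np.abs(predicted - DATA[:, 3:])
print("average delta Y, delta Z:", delta.mean(axis=0))
```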

Next, we use the calibration matrix a to predict the [Yi, Zi] coordinates of world points [Xw, Yw, Zw] that were not part of the calibration set, and compare the predictions with the actual values. The results are below.

| Xw | Yw | Zw | Yr (calculated) | Zr (calculated) | Yi (actual) | Zi (actual) | delta Y |
|---|---|---|---|---|---|---|---|
| 7 | 0 | 10 | 23.18406822 | 222.368334 | 23 | 222 | 0.184068 |
| 0 | 0 | 11 | 101.6775298 | 250.622298 | 102 | 250 | 0.32247 |
| 0 | 8 | 0 | 242.8889262 | 19.3613035 | 242 | 19 | 0.888926 |
| 0 | 7 | 3 | 225.8096953 | 81.2166586 | 226 | 81 | 0.190305 |
| 0 | 6 | 7 | 209.1941664 | 163.542911 | 209 | 163 | 0.194166 |
| 0 | 7 | 11 | 230.3987701 | 244.881633 | 230 | 245 | 0.39877 |
| Average | | | | | | | 0.363118 |
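The prediction step can be sketched the same way for the six new points. As before, this assumes the DLT projection with a34 = 1 and the rounded entries of matrix a, so the averages will not match the table to every digit; `project` is the same hypothetical helper, repeated so the block runs on its own.

```python
import numpy as np

# Matrix a as reported above (rounded), in the assumed order a11..a14, a21..a24, a31..a34
A = np.array([-1.12e1, 16.47929, -3.72e-2, 9.93e1,
              -3.82e0, -2.28e0, 1.88e1, 3.67e1,
              -1.20e-2, -6.07e-3, -2.53e-3, 1.0])

# New points from the last table: columns are Xw, Yw, Zw, Yi, Zi
TEST = np.array([
    [7, 0, 10, 23, 222],
    [0, 0, 11, 102, 250],
    [0, 8, 0, 242, 19],
    [0, 7, 3, 226, 81],
    [0, 6, 7, 209, 163],
    [0, 7, 11, 230, 245],
], dtype=float)


def project(a, world):
    """Map world points (N x 3) to image coordinates (N x 2) with the DLT model."""
    P = a.reshape(3, 4)
    homog = np.hstack([world, np.ones((len(world), 1))])
    proj = homog @ P.T
    return proj[:, :2] / proj[:, 2:3]


predicted = project(A, TEST[:, :3])
delta_y = np.abs(predicted[:, 0] - TEST[:, 3])  # same quantity as the delta Y column
delta_z = np.abs(predicted[:, 1] - TEST[:, 4])
print("average delta Y:", delta_y.mean())
print("average delta Z:", delta_z.mean())
```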